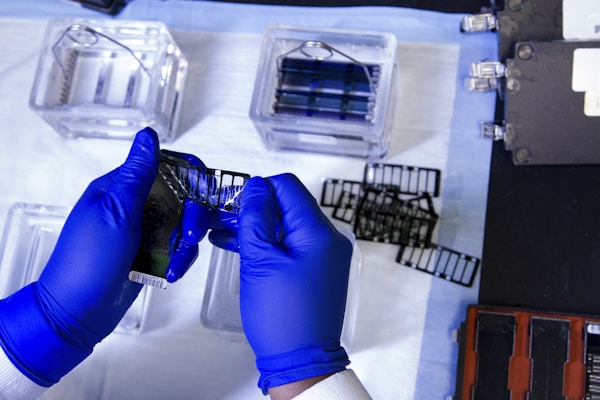

Evaluating the accuracy of genetic tests is essential to ensuring that their results are reliable enough to inform the diagnosis and treatment of disease. Several approaches are available, each with its own strengths and weaknesses. In this blog post, we will explore the approaches used to measure the accuracy of genetic tests and discuss which is most appropriate for different types of tests.

The Process of Validation

One of the most common ways to evaluate the accuracy of a genetic test is validation. In validation, the test is run on a sample of a population and its results are compared with those of a “gold standard” test, such as a traditional laboratory or clinical test. This method is particularly useful for tests used to diagnose a specific disease or condition or to guide its treatment, because it shows how well the test detects or predicts that disease or condition. Validation is also used to assess genetic tests that predict the risk of certain diseases or conditions.
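
To make the comparison against a gold standard concrete, here is a minimal Python sketch. It assumes both the genetic test and the gold standard give a yes/no call for each sample. The names `validate`, `ValidationResult`, `test_calls` and `gold_calls` are hypothetical, and the ten sample calls are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ValidationResult:
    sensitivity: float  # share of true cases the test detects
    specificity: float  # share of non-cases the test correctly clears
    ppv: float          # share of positive results that are true cases
    npv: float          # share of negative results that are true non-cases


def validate(test_results, gold_standard):
    """Compare boolean test calls against boolean gold-standard calls."""
    if len(test_results) != len(gold_standard):
        raise ValueError("both lists must cover the same samples")
    pairs = list(zip(test_results, gold_standard))
    tp = sum(1 for t, g in pairs if t and g)          # true positives
    fp = sum(1 for t, g in pairs if t and not g)      # false positives
    fn = sum(1 for t, g in pairs if not t and g)      # false negatives
    tn = sum(1 for t, g in pairs if not t and not g)  # true negatives

    def ratio(num, den):
        # Avoid dividing by zero when a category is empty.
        return num / den if den else float("nan")

    return ValidationResult(
        sensitivity=ratio(tp, tp + fn),
        specificity=ratio(tn, tn + fp),
        ppv=ratio(tp, tp + fp),
        npv=ratio(tn, tn + fn),
    )


# Made-up calls for ten samples (True = condition reported).
test_calls = [True, True, True, False, False, False, False, True, False, False]
gold_calls = [True, True, False, False, False, False, False, True, True, False]
result = validate(test_calls, gold_calls)
print(result)
```

Sensitivity and specificity describe the test itself, while the two predictive values also depend on how common the condition is in the sample, so they should be read with the sampled population in mind.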

The Process of Calibration

Another approach is calibration. Calibration measures how consistent a test remains over time, which is particularly important for tests that predict the risk of certain diseases or conditions. It is often used in conjunction with validation: validation establishes how accurate a test is, and calibration checks that this accuracy holds up over time.
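
One way to check consistency over time for a risk-predicting test is sketched below. For each time period, it compares the average risk the test predicted with the share of people who actually developed the condition. This is only one possible reading of calibration. The function names, the record layout, the made-up records and the 0.1 tolerance are all assumptions chosen for illustration.

```python
from collections import defaultdict


def calibration_by_period(records):
    """records: iterable of (period, predicted_risk, had_event) tuples.

    Returns {period: (mean_predicted, observed_rate, gap)}.
    """
    grouped = defaultdict(list)
    for period, predicted, had_event in records:
        grouped[period].append((predicted, 1 if had_event else 0))

    summary = {}
    for period, rows in sorted(grouped.items()):
        mean_predicted = sum(p for p, _ in rows) / len(rows)
        observed_rate = sum(e for _, e in rows) / len(rows)
        summary[period] = (mean_predicted, observed_rate,
                           mean_predicted - observed_rate)
    return summary


def drifted_periods(summary, tolerance=0.1):
    """Periods whose predicted-vs-observed gap exceeds the tolerance."""
    return [p for p, (_, _, gap) in summary.items() if abs(gap) > tolerance]


# Made-up records: (period, predicted risk, whether the condition occurred).
records = [
    ("2022", 0.2, False), ("2022", 0.2, False),
    ("2022", 0.4, True), ("2022", 0.2, False),
    ("2023", 0.2, True), ("2023", 0.2, True),
    ("2023", 0.4, True), ("2023", 0.2, False),
]
summary = calibration_by_period(records)
flagged = drifted_periods(summary)
print(summary)
print(flagged)
```

In this toy data the predicted risks are the same in both periods, but far more events occur in the second one, so only that period is flagged.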

The Process of Cross-Validation

In addition to validation and calibration, the accuracy of genetic tests can be evaluated through cross-validation. Cross-validation tests a sample of a population with different versions of the same test, or compares the results of a genetic test with those of a “gold standard” test in several populations. This approach is often used for tests that diagnose a specific disease or condition or guide its treatment, because it shows whether the accuracy of the test is consistent across different populations.
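
The check for consistency across populations can be sketched as follows. The sketch follows this post's use of the term; in statistics and machine learning, cross-validation more commonly means repeatedly splitting data into training and held-out folds. The cohort labels, the carrier calls and the function names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_population(samples):
    """samples: iterable of (population, test_call, reference_call) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for population, test_call, reference_call in samples:
        total[population] += 1
        if test_call == reference_call:
            correct[population] += 1
    return {pop: correct[pop] / total[pop] for pop in total}


def accuracy_spread(per_population):
    """Gap between the best- and worst-performing populations."""
    values = list(per_population.values())
    return max(values) - min(values)


# Made-up calls for two cohorts: (cohort, test call, gold-standard call).
samples = [
    ("cohort_a", "carrier", "carrier"),
    ("cohort_a", "non-carrier", "non-carrier"),
    ("cohort_a", "carrier", "carrier"),
    ("cohort_a", "non-carrier", "non-carrier"),
    ("cohort_b", "carrier", "carrier"),
    ("cohort_b", "non-carrier", "carrier"),
    ("cohort_b", "non-carrier", "non-carrier"),
    ("cohort_b", "carrier", "non-carrier"),
]
per_population = accuracy_by_population(samples)
spread = accuracy_spread(per_population)
print(per_population, spread)
```

A large spread suggests the test performs unevenly across populations, though with small cohorts like these the difference could easily be due to chance.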

The Process of Benchmarking

Finally, the accuracy of genetic tests can be evaluated through benchmarking. Benchmarking compares the results of a genetic test with those of a “gold standard” test or with a set of results from a similar test. This approach is often used for tests that predict the risk of certain diseases or conditions, because it shows how the test performs against an established reference in different populations and whether that performance is consistent across them.
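
As a rough illustration of comparing a test with a reference set of results, the sketch below computes simple percent agreement and Cohen's kappa, which corrects agreement for what would be expected by chance. Kappa is one possible agreement measure chosen here for illustration, not something the approach requires. The risk labels and function names are hypothetical.

```python
from collections import Counter


def percent_agreement(test_calls, reference_calls):
    """Share of samples on which the test and the reference agree."""
    if len(test_calls) != len(reference_calls):
        raise ValueError("both lists must cover the same samples")
    matches = sum(1 for t, r in zip(test_calls, reference_calls) if t == r)
    return matches / len(test_calls)


def cohens_kappa(test_calls, reference_calls):
    """Agreement corrected for the agreement expected by chance."""
    n = len(test_calls)
    observed = percent_agreement(test_calls, reference_calls)
    test_counts = Counter(test_calls)
    ref_counts = Counter(reference_calls)
    # Chance agreement: both sources independently pick the same label.
    expected = sum(
        (test_counts[label] / n) * (ref_counts[label] / n)
        for label in set(test_counts) | set(ref_counts)
    )
    if expected == 1:
        return 1.0  # both sources gave one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up risk categories from the test and from a reference result set.
test_calls = ["high", "high", "low", "low", "low", "high", "low", "low"]
reference_calls = ["high", "low", "low", "low", "low", "high", "low", "high"]
agreement = percent_agreement(test_calls, reference_calls)
kappa = cohens_kappa(test_calls, reference_calls)
print(agreement, kappa)
```

Here the two sources agree on six of eight samples, but kappa is noticeably lower than the raw agreement because much of that agreement would be expected by chance when most samples are labelled "low".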

In conclusion, there are several approaches to evaluating the accuracy of genetic tests. Validation and cross-validation are often used for tests that diagnose a specific disease or condition or guide its treatment, while calibration and benchmarking are often used for tests that predict the risk of certain diseases or conditions. Each approach has its own strengths and weaknesses, and the best approach for a given test will depend on the type of test and the population being tested.